How Search Engines Work: The Basics of Crawling, Indexing & Ranking

In the last post of this SEO guide, we learned why you need SEO and why it matters. We also went through webmaster guidelines, especially the Google Webmaster Guidelines.

The previous post taught us about search engines and how they find the right content on the Internet and present it to the person searching for it.

The hardest part of SEO is making your website, pages, and posts visible on search engines. To make this possible, you need to work on getting your website to rank on the search engine results pages (SERPs).

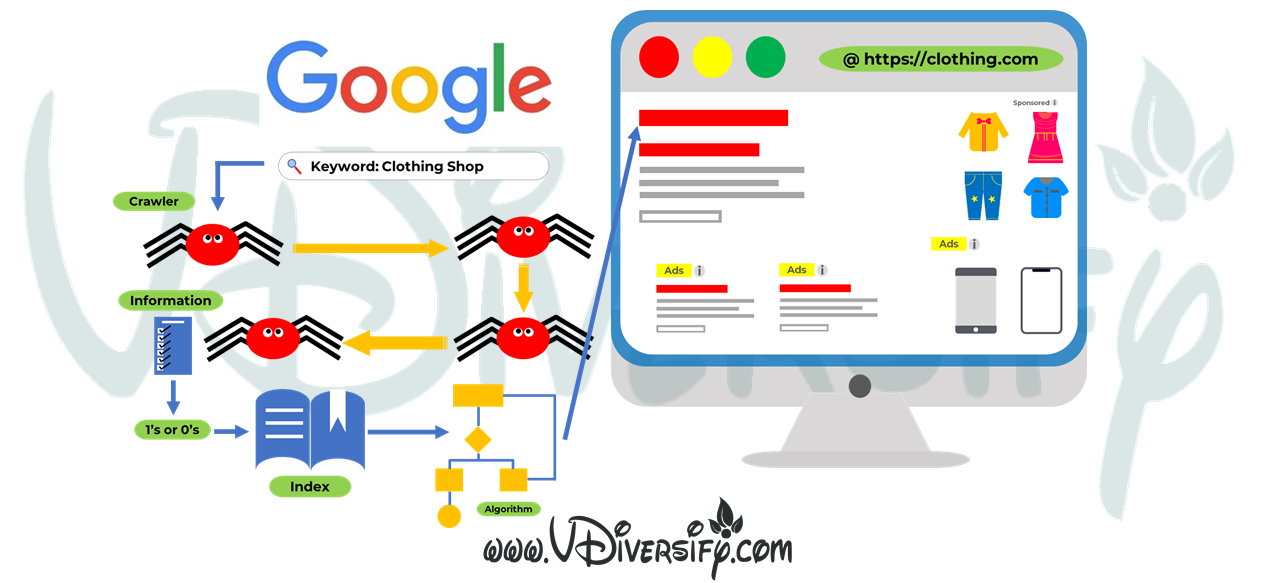

Put simply, a search engine rests on 3 key technical terms.

1. Crawl: Scour the web for content by following links from page to page and looking over what is found at each URL. This is how the search engine discovers your content, pages, posts, and websites.

2. Index: A library that stores and organizes the content found during crawling. Once a page is in the index, it is eligible to be shown on the SERPs.

3. Rank: Show the content that exactly or most nearly matches and answers the user’s search query. In simple words, results are ordered from most relevant to least relevant.
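The three stages can be sketched as a toy pipeline. This is only an illustration, not how any real search engine is implemented: the in-memory `PAGES` "web", its URLs, and the word-count scoring are all assumptions made up for the example.

```python
from collections import defaultdict, deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
PAGES = {
    "a.example/home": ("seo guide for beginners", ["a.example/crawl", "a.example/rank"]),
    "a.example/crawl": ("how crawling works crawling follows links", ["a.example/home"]),
    "a.example/rank": ("ranking orders results by relevance", []),
    "a.example/orphan": ("no page links here so crawling never finds it", []),
}

def crawl(start):
    """Discover pages by following links from a start URL (breadth-first)."""
    seen, queue = [start], deque([start])
    while queue:
        url = queue.popleft()
        for link in PAGES[url][1]:
            if link in PAGES and link not in seen:
                seen.append(link)
                queue.append(link)
    return seen

def build_index(urls):
    """Store, for every word, how often it appears on each crawled page."""
    index = defaultdict(dict)
    for url in urls:
        for word in PAGES[url][0].split():
            index[word][url] = index[word].get(url, 0) + 1
    return index

def rank(index, query):
    """Order matching pages from most to least relevant (by word count here)."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url, count in index.get(word, {}).items():
            scores[url] += count
    return sorted(scores, key=lambda u: (-scores[u], u))

crawled = crawl("a.example/home")
index = build_index(crawled)
print("crawled:", crawled)
print("results:", rank(index, "crawling links"))
```

Note that the orphan page never shows up in the results, even though its text matches the query: no crawled page links to it, so it is never discovered and never indexed.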

As we have already gone through search engine crawling and search engine indexing in our previous post, we will not go into much detail on them right now.

We have also covered the basics of how search engines work, including crawling and indexing. If you want to check it out, please see the post: What Is SEO? Types of SEO | SEO Learning Guide

We all know how important it is to get your website crawled and indexed so that it can appear on the SERPs.

Now let’s focus on how this can be achieved. Start by checking how many of your website’s pages have been indexed. This will tell you whether Google is indexing your pages and whether they are visible.

The easiest way to check your website’s visibility on search engines is to enter “site:yourdomain.com” in the Google search bar.

That is, go to Google.in or Google.com and type “site:yourdomain.com” into the search bar. Google will then show the pages it has indexed for that domain. If nothing is indexed, Google will report that there are no results for the query.

For example, for our site we would type site:vdiversify.com in the Google search bar.
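If you check several domains regularly, the same query can be turned into a link you open in the browser. A minimal sketch, assuming the usual `/search?q=` form of a Google search URL; the helper name `site_query_url` is made up for this example.

```python
from urllib.parse import urlencode

def site_query_url(domain, search_host="www.google.com"):
    """Return a search URL for the query site:<domain>."""
    # urlencode percent-encodes the colon in "site:<domain>".
    return f"https://{search_host}/search?" + urlencode({"q": f"site:{domain}"})

print(site_query_url("vdiversify.com"))
```

Opening the printed link shows the same results as typing the query by hand.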

So now you know which of your website’s pages are indexed and visible on the search engine. You can go back to your website’s control panel and make changes as needed.

This approach, however, will not give you a clear and complete picture of your website’s presence on Google.

To get more accurate and complete details, sign in to Google Search Console.

You can sign up for a free Google Search Console account if you don’t have one. Search Console gives you accurate results for your website’s performance, including the complete Index Coverage report. You can monitor the results and start fixing errors or issues to make the website SEO friendly.

To do that, add and verify your website in Google Search Console and submit your website’s sitemap. You can then follow your website’s Performance and Coverage reports.
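A sitemap is an XML file that lists the URLs you want search engines to know about. Most SEO plugins generate one for you, but a minimal sketch with Python’s standard library shows what the file contains. The page URLs are placeholders, and the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

xml_text = build_sitemap([
    "https://yourdomain.com/",
    "https://yourdomain.com/what-is-seo/",  # hypothetical page
])
print(xml_text)
```

The resulting text would typically be saved as sitemap.xml on your site, and that URL is what you submit in Search Console.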

Below are reasons why your website might not appear anywhere in search results.

1. Your website is brand new and has not yet been crawled by Google

2. You haven’t added your website to Google Search Console

3. You haven’t submitted your sitemap to Google Search Console for indexing

4. No external websites link to your website

5. No social media accounts link to your website

6. Your website’s navigation makes it hard for a robot such as Googlebot to crawl it effectively

7. Google cannot read your robots.txt file

8. Your robots.txt file is not well maintained and makes it difficult for search engines to crawl and identify your website

In most cases, there are a few pages that you don’t want Google to index. These may be duplicate pages, old or duplicate URLs, pages with very little content, or certain pages on e-commerce websites.

One way to direct Googlebot away from such pages and URLs is to use robots.txt.

Robots.txt is a standard used by websites to communicate with web crawlers and other web robots. The standard specifies how to inform the web robot like Google Bot about which areas of the website should not be processed or scanned.

In other words, robots.txt tells search engine crawlers which pages or files the crawler can or can’t request from your site.

The main reason to use robots.txt is to avoid overloading your website with requests. It is not a reliable way to keep a page out of Google: if you want to keep Google out of a few pages, you should use noindex directives or password-protect the pages.

Robots.txt is used primarily to manage crawler traffic to your site, and usually to keep a file off Google, depending on the file type: web page, media file, or resource file.
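To see how a crawler reads these rules, here is a small sketch using Python’s built-in `urllib.robotparser`. The robots.txt content and the paths are hypothetical, and Python’s parser is only a stand-in: Googlebot’s own matching can differ in details.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for yourdomain.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /old-pages/
Disallow: /cart/
"""

robots_parser = RobotFileParser()
robots_parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/what-is-seo/", "/old-pages/offer.html", "/cart/"]:
    allowed = robots_parser.can_fetch("Googlebot", "https://yourdomain.com" + path)
    print(path, "->", "crawl allowed" if allowed else "blocked by robots.txt")
```

Here the rules under `User-agent: *` apply to every crawler, so the two disallowed folders are blocked while the blog post stays crawlable.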

Let us understand the limitations of the robots.txt URL blocking method. Depending on your goals, there are other methods to ensure that your URLs are not findable on the web.

1. Robots.txt directives may not be supported by all search engines

Robots.txt cannot enforce crawler behavior; it is up to each crawler to obey the instructions you have defined in it. So, as said earlier, if you want to keep information secure from web crawlers, you need to adopt other blocking methods, such as password-protecting files on your server.

2. Different crawlers interpret syntax differently

Although respectable web crawlers follow the directives in a robots.txt file, each crawler might interpret the directives differently. You should know the proper syntax for addressing different web crawlers as some might not understand certain instructions.

3. A robotted page can still be indexed if linked to from other sites

Googlebot can still index your page if it is linked from other places on the web, even though the URL is blocked by robots.txt. This means the URL address and other publicly available information, such as anchor text in links to the page, might still appear in Google Search results.

How Googlebot treats robots.txt files:

1. If Googlebot can’t find a robots.txt file for a site, it proceeds to crawl the site.

2. If Googlebot finds a robots.txt file for a site, it will usually abide by the suggestions and proceed to crawl the site.

3. If Googlebot encounters an error while trying to access a site’s robots.txt file and can’t determine if one exists or not, it won’t crawl the site.

Note: The robots.txt file is located in the root directory of your website (for example: yourdomain.com/robots.txt).

Once you are inside Google Search Console and have submitted your website’s sitemap, Google will start crawling your content and pages. While doing so, the crawler may encounter errors.

To see them, open the Crawl Errors report in Google Search Console, which lists the URLs that were found with errors.

Here are a few examples of crawl errors that are occasionally found while crawling:

1. 4xx codes: when search engine crawlers can’t access your content due to a client error

2. 5xx codes: when search engine crawlers can’t access your content due to a server error
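The two groups are simply ranges of HTTP status codes. A minimal sketch that sorts a status code into one of them; the function name and the returned labels are made up for this example.

```python
from http import HTTPStatus

def classify_crawl_result(status_code):
    """Sort an HTTP status code into the crawl error groups described above."""
    if 400 <= status_code < 500:
        return "4xx client error"
    if 500 <= status_code < 600:
        return "5xx server error"
    return "no crawl error"

for code in (200, 404, 503):
    print(code, HTTPStatus(code).phrase, "->", classify_crawl_result(code))
```

A 404 (page not found) lands in the client error group, while a 503 (server temporarily unavailable) lands in the server error group.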

Once the crawling part is ensured and addressed, it’s time to move on to the next topic, i.e. indexing.

Crawling alone does not guarantee that your content and pages will be stored in the index. Once the crawler finds your page, the search engine renders it much like a browser would, analyzes all the content in it, and then stores that information in its index.

To see the crawled version of your website’s page, go to the Google search bar and type in your website URL. This will fetch the results related to your website.

Google lets you see the cached version of your page when you search for your website. This is a snapshot of your page as it was when Googlebot last crawled it.

Note that Google crawls and caches pages more frequently for websites that post often than for websites that post rarely.

Yes, pages or URLs can be removed from the index, based on certain criteria.

Let’s go through a few of the criteria that cause URLs to be removed from the index:

1. The URL returns a “Not Found” error (4XX) or a server error (5XX). This can happen when the page has been moved to a different URL and a 301 redirect was not set up for the old URL

2. A noindex tag was added for the URL, either accidentally (for example while installing and setting up a plugin) or on purpose because you wanted to exclude the page from indexing

3. The page has been penalized for violating the search engine’s Webmaster Guidelines

4. The URL has been password-protected so that the page can’t be accessed

The URL Inspection tool provides information about Google’s indexed version of a specific page. This Information includes AMP errors, structured data errors, and indexing issues.

There may be scenarios where a page was indexed by Google but, for some reason, was later removed or is not showing up in search results. You can use Google’s URL Inspection tool to verify this.

Older versions of Search Console also had a Fetch as Google option with a Request Indexing feature, through which you could submit any of your individual URLs for indexing. The URL Inspection tool now offers the same kind of request.

Here are a few common tasks you can perform with the URL Inspection tool:

1. Request and see the current index status of a URL: Retrieve information about Google’s indexed version of your page and check why Google could or couldn’t index it

2. Inspect a live URL: Test whether a page on your site is able to be indexed or not

3. Request indexing for a URL: Request that a URL be crawled (or re-crawled) by Google

4. View a rendered version of the page: See a screenshot of how Googlebot sees the page

5. View loaded resources list, JavaScript output, and other information: See a list of resources, page code, and more information by clicking the more information link on the page verdict card.

Yes, you can tell search engines how to index your website. There are 3 options you can choose, work on, and customize.

1. Robots Meta Directives

2. Robots Meta Tag

3. X-Robots Tag

Robots Meta Directives:

Meta directives are also called meta tags. They are a set of directives you can use to instruct search engines on how to treat your website.

Robots meta tags are defined between the `<head>` tags of your page, which you can reach through your website’s theme editor.

You can give search engines instructions such as “do not index this page in search results” or “don’t pass any link equity to any on-page links”.

Robots Meta Tag:

These tags can help you exclude all search engines, or specific ones, from a page. They are the meta tags defined between the `<head>` tags in your website’s theme editor.

They cover 3 types of meta directives, viz. index/noindex, follow/nofollow, and noarchive.

Index: By default, all pages, posts, and categories are crawled and indexed. This means you are allowing search engines to keep your website’s content in their index.

Noindex: This tells search engines not to include the page in their index. Pages, posts, or categories marked this way are excluded from search results.

Follow: This tells search engines to follow the links on pages or posts and pass link equity through to those URLs. By default, pages and posts are assumed to be followed.

Nofollow: This tells search engines not to follow the links on pages or posts and not to pass link equity through to those URLs.

Noarchive: This restricts search engines from saving a cached copy of the page.
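To check which of these directives a page actually carries, you can read its robots meta tag. A minimal sketch using Python’s built-in HTML parser; the sample page is hypothetical, and the class name `RobotsMetaParser` is made up for this example.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # Directives are comma-separated and case-insensitive.
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())

# Hypothetical page that should stay out of the index and the cache.
SAMPLE_HTML = """<html><head>
<title>Old offer</title>
<meta name="robots" content="noindex, nofollow, noarchive">
</head><body>...</body></html>"""

meta_parser = RobotsMetaParser()
meta_parser.feed(SAMPLE_HTML)
print("directives:", sorted(meta_parser.directives))
print("indexable:", "noindex" not in meta_parser.directives)
```

A page with no robots meta tag at all yields an empty set, which matches the default behavior of index and follow.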

Note: To set these up, you can use popular plugins such as Rank Math or Yoast SEO.

X-Robots Tag:

The X-Robots-Tag can be used as an element of the HTTP header response for a given URL. Any directive that can be used in a robots meta tag can also be specified as an X-Robots-Tag.

The main advantage here is that multiple X-Robots-Tag headers can be combined within the HTTP response, or you can specify a comma-separated list of directives.
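Both forms end up as the same set of directives. A simplified sketch of merging them, assuming plain directive names only; it does not handle values that name a specific crawler or carry a date, and the function name is made up for this example.

```python
def parse_x_robots_tag(header_values):
    """Merge directives from one or more X-Robots-Tag header values."""
    directives = []
    for value in header_values:
        for part in value.split(","):
            directive = part.strip().lower()
            if directive and directive not in directives:
                directives.append(directive)
    return directives

# Hypothetical response carrying two X-Robots-Tag headers.
header_values = ["noindex", "noarchive, nofollow"]
print(parse_x_robots_tag(header_values))
```

Because the header travels with the HTTP response rather than inside the HTML, this approach also works for non-HTML files such as PDFs and images.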

For more information on Meta Robot Tags, explore Google’s Robots Meta Tag Specifications.

We have covered almost all topics related to crawling and indexing, and a few parts of ranking. We hope you have understood most parts of the post in detail.

In the next post, we will cover the rest of how search engines work, i.e. the ranking of URLs in search engines.

So, stay tuned.

Hey, I am Sachin Ramdurg, the founder of VDiversify.com.

I am an engineer and passionate blogger with an entrepreneurial mindset. I have been blogging for more than 5 years and, since 2012, have followed YouTube, blogging, online business ideas, affiliate marketing, and make-money-online ideas.
